My talk from #SPSADL - Jumpstart your Folksonomy with Text Mining

Yesterday I attended, and was lucky enough to speak at, the second inaugural SharePoint Saturday Adelaide. It was an awesome event and I just wanted to say a big thanks to Brian Farnhill (blog | twitter) and Bruno Lanceleaux (blog | twitter) for organising it and letting me speak.

I presented at one of the the last sessions of the day on using the Text Mining features of SSIS to rapidly create a Folksonomy with Managed Metadata in SharePoint 2010. The talk was in two parts, the first being an overview of Managed Metadata Services in SharePoint 2010, the second half was a proof of concept for using the Text Mining features in SSIS to extract terms from Word 2007/2010 documents (.docx). In speaking afterwards with Adam Cogan (blog | twitter) he thought I was missing a good story to really sell the concept, and as I’m happy to take free advice here it is:

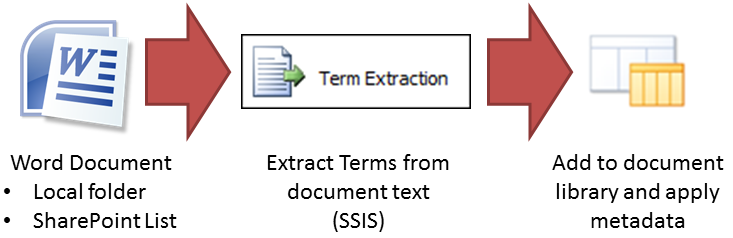

So, I’ve got a large amount of Word documents (.docx) in a file share or SharePoint document library and I really want to use the new Managed Metadata Services in SharePoint 2010. Due to the large amount of documents I need a quick way of creating a set of Terms in the Term store and then tag the appropriate Term to a document.

The proof of concept was to use the out of the box Text Mining (Term Extraction) feature in SQL Server Integration Services to discover the key terms in each document, upload the documents to a document library and then apply the terms to a Managed Metadata column.

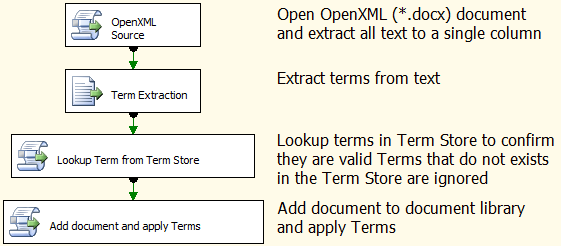

The solution is made up into the following three SSIS packages:

Step 1

-

Open a word document using the OpenXML v2 SDK

-

Get the key terms using the Term Extraction transform

-

Insert the Terms and Score into a SQL Server table

Step 2

-

Select the terms from SQL Server

-

Add the terms to a Term Set in Managed Metadata Services (SharePoint)

Step 3

-

Open the word document using OpenXML v2 SDK

-

Extract the Terms and compare them to the Terms in the Term Set

-

Upload the Word document to a Document Library and apply the Managed Metadata

Although the overall process could easily be done in one single package, I’ve deliberately split it up in to the three parts. This was done to allow for extra (manual) analysis to be undertaken between each step. From my example set of 44 documents the Term Extraction transform identified just over 2100 terms, with a large percentage of them being rubbish. If I had allowed them all to be added to a term store it would make managing them a pain. Taking the time to get your terms in good order first, then uploading and then tagging the documents will save time in the long-term.

The full solution can be downloaded from here (.zip 94KB). Don’t hesitate to ask any questions, and if you find this useful then please let me know.